August 6, 2025

Zeljka Zorz

Microsoft’s Project Ire prototype autonomously detects malware with high accuracy, promising advances in AI-driven cybersecurity.

Project Ire: Microsoft’s AI-Powered Autonomous Malware Detection Agent

Microsoft is developing an AI agent for autonomous malware detection. The prototype, called Project Ire, is showing promising results, according to a company announcement on August 5, 2025.

Tested on a dataset of known malicious and benign Windows drivers, Project Ire correctly classified 90% of all files and flagged only 2% of benign files as threats.

In a separate test involving nearly 4,000 files that Microsoft’s automated systems could not classify and that had not been manually reviewed by expert reverse engineers, nearly 9 out of 10 files the prototype flagged as malicious were in fact malicious. It maintained a low false positive rate of 4% but detected only about a quarter of all actual malware.

“While overall performance was moderate, this combination of accuracy and a low error rate suggests real potential for future deployment,” the research team noted.
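The figures from the second test can look contradictory at first: most flagged files were truly malicious, yet only about a quarter of all malware was caught. The minimal sketch below shows how those numbers can coexist, assuming the "nearly 90%" figure is precision (the share of flagged files that were truly malicious) and the "quarter" figure is recall. The counts are hypothetical, chosen only to reproduce the reported rates; they are not Microsoft's actual data, and the class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Outcome counts for a binary malware classifier."""
    true_pos: int   # malicious files flagged as malicious
    false_pos: int  # benign files flagged as malicious
    true_neg: int   # benign files left unflagged
    false_neg: int  # malicious files that were missed


def precision(c: ConfusionCounts) -> float:
    """Share of flagged files that were truly malicious."""
    return c.true_pos / (c.true_pos + c.false_pos)


def recall(c: ConfusionCounts) -> float:
    """Share of all malicious files that were flagged."""
    return c.true_pos / (c.true_pos + c.false_neg)


def false_positive_rate(c: ConfusionCounts) -> float:
    """Share of benign files wrongly flagged as malicious."""
    return c.false_pos / (c.false_pos + c.true_neg)


# Hypothetical counts (NOT from the announcement): 1,250 malicious and
# 1,000 benign files, picked so the rates match the reported figures.
example = ConfusionCounts(true_pos=325, false_pos=40, true_neg=960, false_neg=925)

if __name__ == "__main__":
    print(f"precision:           {precision(example):.2f}")
    print(f"recall:              {recall(example):.2f}")
    print(f"false positive rate: {false_positive_rate(example):.2f}")
```

In this illustration the classifier flags few files overall, so its flags are reliable (high precision, low false positive rate) even though it misses most of the malware (low recall).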

About Project Ire

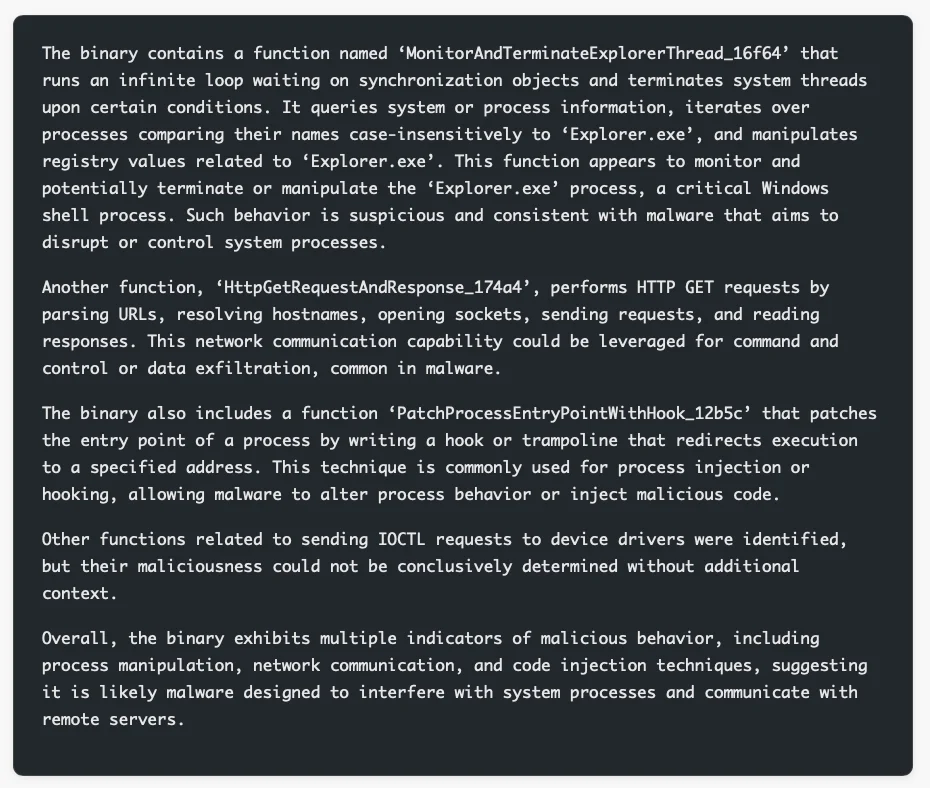

Currently in the prototype phase, Project Ire leverages advanced language models available through Azure AI Foundry alongside various reverse engineering and binary analysis tools.

The evaluation process begins with automated reverse engineering to determine the file type and structure and to highlight areas requiring closer inspection. After triage, the system reconstructs the software’s control flow graph using frameworks such as angr and Ghidra. This graph maps program execution, enabling an iterative analysis of each function with the help of language models and specialized tools.

Summaries of these analyses are compiled into a “chain of evidence” record, providing transparency into the system’s reasoning. This record allows security teams to review results and helps developers refine the system when misclassifications occur.

Project Ire applies Microsoft’s public criteria to classify samples as malware, potentially unwanted applications, tampering software, or benign files. To verify findings, Project Ire can invoke a validator tool that cross-checks claims against the chain of evidence. This tool incorporates expert statements from malware reverse engineers on the Project Ire team. Using this evidence and its internal model, the system generates a final report classifying the sample as malicious or benign.
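The article does not describe the internal format of the chain of evidence or how the validator works, so the following is only a rough sketch of the general idea: per-function findings are appended to a log, and each claim in a draft report is accepted only if every function it cites has recorded evidence. All class names, field names, and sample findings here are hypothetical and are not taken from Project Ire.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Evidence:
    """One recorded observation about a single function (hypothetical schema)."""
    function: str  # name or address of the analyzed function
    tool: str      # tool that produced the observation
    finding: str   # short summary of what was observed


@dataclass(frozen=True)
class Claim:
    """A statement in the draft report and the functions it relies on."""
    statement: str
    cites: tuple[str, ...]


@dataclass
class EvidenceLog:
    """Append-only record of findings, acting as the audit trail."""
    entries: list[Evidence] = field(default_factory=list)

    def record(self, function: str, tool: str, finding: str) -> None:
        self.entries.append(Evidence(function, tool, finding))

    def functions_with_evidence(self) -> set[str]:
        return {entry.function for entry in self.entries}


def validate(claims: list[Claim], log: EvidenceLog) -> tuple[list[Claim], list[Claim]]:
    """Split claims into those fully backed by the log and those that are not."""
    known = log.functions_with_evidence()
    supported, unsupported = [], []
    for claim in claims:
        # A claim with no citations, or citing an unanalyzed function, is rejected.
        if claim.cites and all(name in known for name in claim.cites):
            supported.append(claim)
        else:
            unsupported.append(claim)
    return supported, unsupported


# Hypothetical sample data for illustration only.
log = EvidenceLog()
log.record("sub_401000", "decompiler", "terminates processes by name")
log.record("sub_401200", "decompiler", "opens an outbound network connection")

claims = [
    Claim("Sample kills security processes", cites=("sub_401000",)),
    Claim("Sample encrypts user files", cites=("sub_409999",)),  # never analyzed
]

supported, unsupported = validate(claims, log)

if __name__ == "__main__":
    for claim in supported:
        print("supported:  ", claim.statement)
    for claim in unsupported:
        print("unsupported:", claim.statement)
```

Keeping the log append-only and tying every claim to named entries is one simple way to give reviewers a trail they can follow from a verdict back to the underlying observations.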

There have been instances where the AI agent’s reasoning contradicted human experts but was later proven correct. Mike Walker, Research Manager at Microsoft, told Help Net Security that these cases demonstrate the complementary strengths of humans and AI in protection.

“Our system is designed to capture risk reasoning at each step, and it’s critical to have a detailed audit trail of line-of-reasoning to allow for deeper investigation of the system.”

Project Ire will be integrated into Microsoft Defender as a binary analyzer tool for threat detection and software classification. Ultimately, researchers hope Project Ire will autonomously detect novel malware directly in memory at scale.

Source: Originally published at Help Net Security on August 5, 2025.